Authored by Colin Pawsey, an Automotive IQ and Wind Energy IQ writing consultant on trends and technologies in the automotive and wind energy sectors. This is one in a series of periodic guest columns by industry thought leaders.

Autonomous technology is set to transform the motor industry, but there are no clear paths for manufacturers. As the autonomous mobility industry takes shape, artificial intelligence could play a much bigger role.

The Society of Automotive Engineers (SAE) classifies vehicle autonomy into six levels, numbered 0 to 5, based on how much driver intervention is needed. Level 1, for example, requires a driver to be in control at all times but allows for automated acceleration and braking. Level 5 requires no human intervention. Most automakers are on the cusp of introducing Level 3 and 4 autonomous vehicles.
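As a rough illustration, the SAE scale can be written down as a small lookup. The level numbers and names follow the SAE J3016 standard; the `human_role` helper and its one-line summaries are hypothetical wording for this sketch, not text from the standard.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Driving-automation levels as numbered in SAE J3016."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_role(level: SAELevel) -> str:
    """Summarize what the human must do at a given level (illustrative wording)."""
    if level <= SAELevel.PARTIAL_AUTOMATION:
        return "drives or supervises at all times"
    if level == SAELevel.CONDITIONAL_AUTOMATION:
        return "must take over when the system asks"
    if level == SAELevel.HIGH_AUTOMATION:
        return "not needed within the system's operating domain"
    return "not needed"


for level in SAELevel:
    print(f"Level {level.value} ({level.name}): human {human_role(level)}")
```

The jump from Level 2 to Level 3 is where responsibility for monitoring the road starts to shift from the driver to the system, which is why Levels 3 and 4 are such a significant step for automakers.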

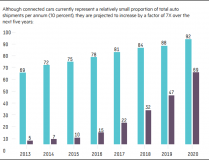

The Self-Drive Act, passed by the U.S. House of Representatives in September, would establish key deadlines for how these technologies will make their way onto the streets and highways. It would allow stakeholders to put up to 100,000 autonomous vehicles on the road for testing purposes by 2021.

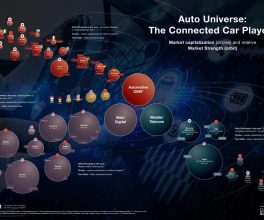

The pace at which the technology is developing has created an environment where traditional manufacturers risk being overtaken by tech firms. This is pushing cross-industry collaboration.

Self-driving systems are now based on complex algorithms, cameras and Lidar sensors to create a digital world that helps autonomous vehicles orient their position on the road and identify other vehicles, cyclists and pedestrians. Such systems are incredibly difficult to design and produce. They must be programmed to cope with an almost limitless number of variables found on roads.

As the industry looks to machine-learning as the basis for autonomous systems, artificial intelligence could be the next big disruptor.

AI the Driving Force

Earlier this year, Apple Chief Executive Tim Cook described the challenge of building autonomous vehicles as “the mother of all” AI projects.

That is because huge amounts of computing power are required to interpret all of the data harvested from a range of sensors and then enact the correct procedures for constantly changing road conditions and traffic situations.

This is where deep learning comes in: such systems can be ‘trained’ to drive and to develop decision-making processes similar to a human’s.

Deep learning is a family of algorithms that model high-level abstractions in data by stacking multiple non-linear transformations. Deep-learning architectures such as deep neural networks (DNNs) and convolutional neural networks (CNNs) have already been applied successfully to fields like speech recognition.
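To make the idea of multiple non-linear transformations concrete, here is a minimal sketch of a forward pass through a small network in plain Python. The layer sizes, the `tanh` activation and the random, untrained weights are all assumptions chosen for illustration; a real driving system would use trained weights and far larger networks.

```python
import math
import random


def dense_layer(inputs, weights, biases):
    """One non-linear transformation: a weighted sum per unit, then tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def random_layer(n_in, n_out, rng):
    """Create untrained (random) weights and zero biases for illustration."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(inputs, layers):
    """Pass the input through each layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


rng = random.Random(0)
layer_sizes = [4, 8, 8, 2]  # hypothetical: 4 inputs, two hidden layers, 2 outputs
layers = [random_layer(a, b, rng) for a, b in zip(layer_sizes, layer_sizes[1:])]
outputs = forward([0.5, -0.2, 0.1, 0.9], layers)
print(outputs)
```

Training consists of adjusting the weights so that the outputs match labeled examples. The "deep" in deep learning refers to the number of such layers stacked between input and output.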

Several companies, such as tech giant Nvidia and startup Drive.ai, are developing AI systems for autonomous vehicles.

Ford Investment in Argo AI

Traditional manufacturers are getting in on the act too, as Ford’s investment in Argo AI demonstrates. The automaker will invest $1 billion in the startup over the next five years to capture its robotics experience and develop artificial intelligence software.

Argo will develop a virtual driver system for Ford’s Level 4 autonomous vehicle due for 2021.

In October, Argo AI acquired Princeton Lightwave, a company with extensive experience in the development and commercialization of Lidar sensors. Key targets highlighted by Argo upon the acquisition are increasing the range, resolution and field of view of Lidar sensors and lowering costs in order to manufacture at scale.

Nvidia Drive PX

Originally a computer game chip company, Nvidia is now one of the leading tech firms in the autonomous driving field. Its Drive PX family of AI car computers enables automakers, suppliers and startups to accelerate production of autonomous and automated vehicles. In October, the firm introduced Pegasus, a new member of the Drive PX family.

The Pegasus AI computer can perform 320 trillion deep-learning operations per second. Roughly the size of a license plate, it has the AI performance of a 100-server data center and makes Nvidia the first tech company to offer a complete autonomous-vehicle (AV) stack for Level 4 and 5 autonomous driving.

Data is fused from multiple cameras as well as Lidar, radar and ultrasonic sensors. This allows algorithms to accurately understand the full 360-degree environment around the car. The use of deep neural networks (DNN) for the detection and classification of objects dramatically increases the accuracy of the fused sensor data.
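One way to see why fusing sensors helps is inverse-variance weighting, a textbook technique in which each reading is weighted by how precise it is. The sketch below is not Nvidia's pipeline; the sensor names, readings and variances are hypothetical numbers chosen for illustration.

```python
def fuse_estimates(estimates):
    """Combine (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and the variance of the fused value.
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused_value = sum(w * val for w, (val, _) in zip(weights, estimates)) / total
    fused_variance = 1.0 / total
    return fused_value, fused_variance


# Hypothetical distance-to-object readings in metres: (estimate, variance).
readings = {
    "camera": (25.8, 4.0),
    "lidar": (25.1, 0.04),
    "radar": (24.6, 0.25),
}
distance, variance = fuse_estimates(list(readings.values()))
print(f"fused distance: {distance:.2f} m, variance: {variance:.4f}")
```

The fused variance is smaller than that of the best single sensor, which is the basic argument for combining cameras, Lidar and radar rather than relying on any one of them.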

Drive.ai

Drive.ai is a Silicon Valley startup founded in 2015 by former colleagues from Stanford University’s artificial intelligence lab. Drive.ai says it is “building the brain of the self-driving car.”

Drive sees deep learning as the only viable route to fully autonomous vehicles. By structuring its approach to self-driving cars around deep learning, Drive has been able to quickly scale to safely cope with the wide range of conditions and variables that autonomous vehicles need to master. The difference is that while many companies follow a piecemeal approach to deep learning, using it for some components of the system but not all, Drive approaches self-driving from a holistic deep-learning perspective.

The hardware used on Drive’s fleet of four test vehicles consists of an array of sensors, cameras and Lidar retrofitted to the roof. The system also takes advantage of the car’s own integrated sensors such as radar and rear cameras. Each vehicle continuously captures data for map generation, deep learning and driving tasks as it navigates roads.

One note of caution in the use of deep learning is the so-called black box.

Once the system is ‘trained,’ data are fed in and an interpretation of the data is fed out. But the actual decision-making process in between is not something humans can necessarily understand. This is why many companies use deep learning for perception but are less comfortable using it for decision-making. It’s one of the key issues with AI technology. If the system makes a mistake, it is important to be able to understand why in order to correct it. To counter this problem, Drive has broken the system down into parts. Understanding how each of the parts can be validated in different ways provides more confidence in how the system will behave.
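The general idea of splitting a system into separately validated parts can be sketched as a pipeline in which each stage has its own independent check. This is not Drive.ai's architecture; the `Stage` class, the `perceive` and `decide` stand-ins and the thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class Stage:
    """One part of the system plus an independent check on its output."""
    name: str
    run: Callable[[Any], Any]
    validate: Callable[[Any], bool]


def run_pipeline(stages: List[Stage], data: Any) -> Any:
    """Run stages in order; stop and name the stage whose output fails its check."""
    for stage in stages:
        data = stage.run(data)
        if not stage.validate(data):
            raise ValueError(f"stage '{stage.name}' produced an invalid output: {data!r}")
    return data


# Hypothetical stand-ins for learned components.
def perceive(sensor_frame):
    # Pretend detector: report the distance (m) to the nearest obstacle.
    return {"obstacle_distance_m": min(sensor_frame)}


def decide(scene):
    # Pretend planner: brake if the obstacle is closer than 10 m.
    action = "brake" if scene["obstacle_distance_m"] < 10.0 else "cruise"
    return {"action": action}


stages = [
    Stage("perception", perceive, lambda s: s["obstacle_distance_m"] >= 0.0),
    Stage("decision", decide, lambda d: d["action"] in {"brake", "cruise"}),
]
result = run_pipeline(stages, [42.0, 7.5, 18.3])
print(result)
```

When a check fails, the error names the stage responsible, so a mistake can be traced to one part of the system even if that part is itself a black box.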

Summary

It’s a fascinating time for the motor industry, and the ever-shifting landscape of autonomous technology makes it difficult to predict the winners and losers. Silicon Valley is ideally placed to have a huge impact on the AI and software systems that underpin self-driving vehicles, but any future mass rollout of autonomous cars remains in the realm of the large-scale manufacturers.

One thing that does appear to be certain is that artificial intelligence will play a leading role in the development of Level 4 and Level 5 autonomous cars, and the race is on to produce and demonstrate the most viable, safe and robust systems.

Editor’s note: Trucks.com is the media sponsor of the Autonomous Vehicles Silicon Valley conference. More information about the event follows.

Join next year’s Autonomous Vehicles Silicon Valley event, taking place Feb. 26 – 28, which brings together key stakeholders to discuss how to develop and implement the next generation of autonomous vehicles.

The conference will feature leading industry experts discussing the latest trends and issues in autonomous technology, including technical components from Lidar to cyber security software, robotics and artificial intelligence, regulation and federal legislation, talent recruitment, and public perception.

Courtesy of Trucks.com International